The following is part of a series of thought pieces authored by members of the START Consortium. These editorial columns reflect the opinions of the author(s), and not necessarily the opinions of the START Consortium. This series is penned by scholars who have grappled with complicated and often politicized topics, and our hope is that they will foster thoughtful reflection and discussion by professionals and students alike.

The National Consortium for the Study of Terrorism and Responses to Terrorism (START) is preparing to release its annual update to the Global Terrorism Database (GTD), the largest and most comprehensive data collection devoted to worldwide incidents of terrorism. Users of the GTD will be happy to find that the update brings with it several notable additions to the database, such as international/domestic variables, attack target subtypes, and complete geocoding for incidents that occurred in 2012.

What may not be obvious to users of the database, however, is that the current update and its associated additions were largely made possible by significant improvements to the data collection methodology and technologies that the GTD team uses to compile the database.1 The point of this short discussion piece is to describe these improvements, detail the benefits that were derived from them, and explore the often unforeseen challenges that data collectors face in ensuring that their data remain accurate and consistent in light of advancements in data collection tools and practices.

In the spring of 2012, START’s leadership decided to move the ongoing collection and coding of the GTD, which had previously been done by outside vendors, to START’s headquarters at the University of Maryland. This decision was driven by a desire among START staff to make improvements and updates to the database on a more regular basis, and it was quickly reinforced by a new contract to provide the statistical annex for the State Department’s annual Country Reports on Terrorism.

The decision to move the collection of the GTD in-house entailed significant changes, both in terms of the resources that were needed to successfully make the transition, and the advances in collection methods and technologies that had to be made in order to achieve the desired improvements to the database.

Three areas related to data collection and coding in particular were identified early on as candidates for improvement: (1) the population of sources that are used to compile the database; (2) the procedures that are used to identify potentially relevant information in those sources; and (3) the workflow and technologies that are used to identify and code the events that are included in the GTD.

Upon moving the collection in-house, the GTD team immediately realized that in order to continue providing users with the most comprehensive terrorism event database in the world, the GTD would have to cast a wide net when it comes to the number and types of news sources that are used for compiling the database. Having a diverse and large number of sources to draw on allows the GTD team to identify the universe of events that meet the database’s inclusion criteria, and also helps ensure that the team has the best and most up-to-date information available to accurately code over 120 variables, which range from location to perpetrators to casualties, for every incident that is included in the GTD.

Instead of relying on the common practice of performing targeted searches against a few well-known news sources or news aggregators—a technique that was used in the past by vendors tasked with compiling the GTD—the GTD team ultimately decided that the best way forward was to start with an extensive pool of news articles culled from myriad sources.

Those articles could then be filtered based on carefully crafted Boolean search terms, leaving a population of news stories likely to include content about terrorism or other forms of political violence. Currently, the GTD downloads on average 1.3 million news articles per day from a pool of over 55,000 unique sources. Of these news articles, approximately 7,000 typically include some information about terrorist attacks or related topics.
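The piece does not publish the GTD's actual Boolean search terms, so the following is only a minimal Python sketch of how a Boolean filter of this kind can be expressed: an article passes if it mentions at least one violence term AND at least one actor term, and NOT any exclusion phrase. All of the term lists below are hypothetical placeholders.

```python
import re

# Hypothetical term lists for illustration only; the GTD's real Boolean
# search terms are not described in this piece.
# Query shape: (ANY violence term) AND (ANY actor term) AND NOT (ANY exclusion)
VIOLENCE_TERMS = ["bomb", "explosion", "gunmen", "attack", "kidnapp"]
ACTOR_TERMS = ["militant", "insurgent", "rebel", "extremist", "terror"]
EXCLUDE_TERMS = ["video game", "heart attack", "box office"]


def _contains_any(text: str, terms: list[str]) -> bool:
    """True if any term occurs at the start of a word (case-insensitive prefix match)."""
    return any(re.search(r"\b" + re.escape(term), text, re.IGNORECASE) for term in terms)


def passes_boolean_filter(text: str) -> bool:
    """Apply the hypothetical AND / AND NOT query to one article's text."""
    return (
        _contains_any(text, VIOLENCE_TERMS)
        and _contains_any(text, ACTOR_TERMS)
        and not _contains_any(text, EXCLUDE_TERMS)
    )


articles = [
    "Gunmen suspected to be militants attacked a police station on Tuesday.",
    "The new video game lets players attack rebel bases.",
    "Markets rallied after the central bank cut rates.",
]
kept = [a for a in articles if passes_boolean_filter(a)]
```

Matching on word prefixes lets a single term such as `terror` catch both "terrorist" and "terrorism", while the exclusion list handles phrases like "heart attack" that would otherwise trip the `attack` term.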

As one might expect, the articles that remain after the Boolean filters have been applied include a healthy dose of duplicate stories, as well as news reports that are, at best, tangentially related to terrorist attacks. To deal with these issues, and in the interest of compiling a manageable population of news articles for review by human coders, the GTD team decided next to make significant improvements to the procedures that it uses to identify the set of unique articles in the pool that are most likely to provide accounts of actual incidents of terrorism.

As a first step in this process, the GTD team uses natural language processing (NLP) techniques to measure the cosine similarity of article pairs in order to identify and eliminate duplicate articles. Essentially, for each article in the pool, a word histogram is built from the frequency of unique terms, as well as two- and three-word phrases, to serve as that article's unique fingerprint.

The fingerprints of article pairs are then compared to determine their similarity. If the similarity between a pair of articles meets or exceeds a predetermined threshold (in the GTD’s case, a similarity score of .825), one of the articles is removed from the pool as a duplicate story.2
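The fingerprinting and comparison steps described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the GTD's implementation: the piece does not specify the tokenizer, whether raw counts or weighted counts (such as TF-IDF) are used, or which article of a duplicate pair is dropped. The sketch assumes simple lowercase word tokens, raw counts of one-, two- and three-word phrases, and keeps whichever article was seen first. Only the .825 threshold comes from the text.

```python
import math
import re
from collections import Counter

SIMILARITY_THRESHOLD = 0.825  # the threshold reported for the GTD


def fingerprint(text: str) -> Counter:
    """Histogram of single words plus two- and three-word phrases (raw counts)."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    grams = Counter()
    for n in (1, 2, 3):
        for i in range(len(words) - n + 1):
            grams[" ".join(words[i:i + n])] += 1
    return grams


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine of the angle between two sparse count vectors."""
    dot = sum(count * b[gram] for gram, count in a.items())  # missing grams count as 0
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def deduplicate(articles: list[str], threshold: float = SIMILARITY_THRESHOLD) -> list[str]:
    """Keep an article only if it falls below the threshold against every article kept so far."""
    kept, kept_prints = [], []
    for text in articles:
        fp = fingerprint(text)
        if all(cosine_similarity(fp, other) < threshold for other in kept_prints):
            kept.append(text)
            kept_prints.append(fp)
    return kept
```

Comparing every new article against every kept one grows quadratically with the size of the pool; at thousands of articles per day that is still tractable, but a larger system might add an indexing or hashing step (an assumption; the piece does not say how the GTD handles this).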

After de-duplication, the pool of articles left for review typically stands at about 2,500 per day. While more manageable, this set is still quite large, and it likely contains stories that, while possibly related to terrorism, are not necessarily about individual attacks. In the interest of efficiency and accuracy, the GTD staff next uses machine learning tools to further refine the pool down to the articles most likely to describe unique incidents of terrorism.
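The approximate daily volumes reported so far make it easy to see how much each stage removes. The short calculation below uses only the rounded figures from the text (1.3 million downloaded, about 7,000 after the Boolean filters, about 2,500 after de-duplication), so the percentages are rough.

```python
# Approximate daily article counts reported in the text (rounded figures).
PIPELINE = [
    ("downloaded", 1_300_000),
    ("after Boolean filters", 7_000),
    ("after de-duplication", 2_500),
]


def stage_retention(stages):
    """Fraction of the previous stage's articles that survive each later stage."""
    return [
        (name, count / prev_count)
        for (_, prev_count), (name, count) in zip(stages, stages[1:])
    ]


for name, share in stage_retention(PIPELINE):
    print(f"{name}: {share:.1%} of the previous stage retained")
```

On these figures, roughly half a percent of downloaded articles survive the Boolean filters, and de-duplication then removes close to two of every three of the remaining stories.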

Post-de-duplication articles are run through an algorithm that judges them in terms of the likelihood that they describe terrorist attacks, and assigns them a relevancy score on a scale of -2 to 2, with 2 being an article that almost certainly contains information about an incident of terrorism, and -2 being a story that satisfies the Boolean filters, but does not necessarily describe an act of terrorism. All articles that are assigned positive relevancy scores are designated by the model to be read by human coders. This process typically leaves about 550 articles per day to be read by the GTD staff.
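The piece does not identify the model behind the relevancy score, its features, or how it was trained. As a hedged illustration of the scoring and selection rule only, the sketch below assumes a toy logistic model with hypothetical keyword weights, rescales its probability linearly onto the -2 to 2 scale described above, and passes articles with a strictly positive score to human coders.

```python
import math

# Hypothetical feature weights for illustration only; the GTD model's
# algorithm, features and training data are not described in the text.
WEIGHTS = {
    "killed": 1.5, "bomb": 1.2, "claimed responsibility": 2.0,
    "conference": -1.0, "policy": -1.2, "anniversary": -1.5,
}
BIAS = -0.5


def attack_probability(text: str) -> float:
    """Toy logistic model: estimated probability that the text describes an attack."""
    lowered = text.lower()
    z = BIAS + sum(w for term, w in WEIGHTS.items() if term in lowered)
    return 1.0 / (1.0 + math.exp(-z))


def relevancy_score(text: str) -> float:
    """Rescale the probability linearly from [0, 1] onto the [-2, 2] relevancy scale."""
    return 4.0 * attack_probability(text) - 2.0


def select_for_human_review(articles: list[str]) -> list[str]:
    """Only articles with a positive relevancy score are routed to human coders."""
    return [a for a in articles if relevancy_score(a) > 0]


articles = [
    "A bomb killed 12 people and a local group claimed responsibility.",
    "Officials held a conference on counterterrorism policy.",
]
for text in articles:
    print(f"{relevancy_score(text):+.2f}  {text}")
```

Any model that outputs a probability could be plugged into `attack_probability`; the linear rescaling simply maps a 50 percent estimate to a score of 0, which is the cutoff for human review in this sketch.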

While START researchers are quite interested in the potential benefits of automated coding practices, such as those used to compile the Global Database of Events, Language, and Tone (GDELT), years of data collection experience have reinforced a belief among the GTD staff that there currently are no substitutes for human interpretation when it comes to constructing a data collection that is as expansive and complex as the GTD.3 For this reason, a decision was made to re-conceptualize the GTD’s workflow and to devote significant resources to developing a set of tools that would make it possible for the GTD staff to process a large volume of news articles on a daily basis without sacrificing any of the attention to detail that is the backbone of the GTD’s comprehensiveness and accuracy.

Several important changes have been made to the GTD’s workflow, but one in particular that may be of interest to others who manage their own large data collections was a decision to reorganize the GTD coding teams around variable domains as opposed to regions of the world. Instead of having teams that are dedicated to identifying and coding all attacks that occur in particular parts of the world, GTD coding teams are now organized to code a specific set of variables, such as those related to attack type and weapons, targets and perpetrators, casualties, and so on, for all attacks, regardless of their geographic location.
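One way to picture the domain-based organization is as a shared incident record that each team fills in only for its own variables. The Python sketch below is hypothetical throughout: the domain names, variable names and the rule that a team may not write outside its domain are illustrative assumptions, not the GTD's actual codebook or software.

```python
from dataclasses import dataclass, field

# Hypothetical domain-to-variable assignment; the real GTD has more than
# 120 variables, and its exact team boundaries are not listed in the text.
DOMAINS = {
    "attack_weapons": {"attack_type", "weapon_type"},
    "targets_perpetrators": {"target_type", "perpetrator_group"},
    "casualties": {"fatalities", "injured"},
}


@dataclass
class Incident:
    incident_id: str
    values: dict = field(default_factory=dict)

    def code(self, domain: str, coded: dict) -> None:
        """Record one team's values, rejecting variables outside that team's domain."""
        outside = set(coded) - DOMAINS[domain]
        if outside:
            raise ValueError(f"{domain} team cannot code {sorted(outside)}")
        self.values.update(coded)

    def missing(self) -> set:
        """Variables that no team has coded yet."""
        all_variables = set().union(*DOMAINS.values())
        return all_variables - set(self.values)


incident = Incident("example-001")
incident.code("casualties", {"fatalities": 5, "injured": 12})
incident.code("attack_weapons", {"attack_type": "bombing", "weapon_type": "explosives"})
print(sorted(incident.missing()))  # ['perpetrator_group', 'target_type']
```

Because each team touches a disjoint set of variables, several teams can work on the same incident at once, and the `missing` check gives a simple completeness test before quality control.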

This change has allowed the GTD to achieve a level of inter-coder reliability that was difficult to attain when individual coders were responsible for knowing the coding nuances for more than 120 variables. It has also allowed the GTD team to streamline the coding of thousands of unique terrorism events, making sure that the ongoing collection proceeds at a reasonable pace.

In addition to workflow changes, the GTD team also realized that new tools would be needed in order to handle a significantly increased volume of news articles and to make possible the simultaneous coding of single incidents by various domain teams. The standard practice of maintaining folders full of individual article PDFs and Microsoft Excel spreadsheets was no longer a viable option for the GTD. To this end, the GTD staff developed innovative data management software that allows coders to perform, in one easy-to-use interface, all of the tasks associated with constructing the database: reading news articles and identifying attacks, clustering related news stories and incidents, discarding redundant articles, coding an extensive set of variables for each case, and performing routine quality control on the data.

The software achieves its integration and efficiency in part by using data mining techniques, such as clustering and named entity recognition (NER), to automatically extract pertinent information from news articles. It then uses that information to form linkages between similar articles and incidents. The GTD’s Data Management System (DMS) has profoundly changed the way that the GTD is compiled, and it, more than any other change, has made the massive expansion of recent collection efforts possible.
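The text says the DMS links similar articles and incidents using clustering and named entity recognition but gives no further detail. The sketch below illustrates only the linking step, under explicit assumptions: entities (places, groups, dates) are taken as already extracted by some NER step that is not shown, two articles are linked when the Jaccard overlap of their entity sets reaches a hypothetical threshold of 0.5, and linked articles are grouped by single-linkage clustering with a union-find structure. The article IDs and entity strings are placeholders.

```python
from itertools import combinations

# Assumes entities were already extracted by an NER step (not shown).
# The 0.5 threshold is a hypothetical choice, not a GTD parameter.
LINK_THRESHOLD = 0.5


def jaccard(a: set, b: set) -> float:
    """Share of entities the two sets have in common."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def cluster_articles(entities: dict[str, set[str]],
                     threshold: float = LINK_THRESHOLD) -> list[set[str]]:
    """Single-linkage clustering: articles that share enough entities join one group."""
    parent = {aid: aid for aid in entities}

    def find(x: str) -> str:
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving keeps trees shallow
            x = parent[x]
        return x

    for a, b in combinations(entities, 2):
        if jaccard(entities[a], entities[b]) >= threshold:
            parent[find(a)] = find(b)

    groups: dict[str, set[str]] = {}
    for aid in entities:
        groups.setdefault(find(aid), set()).add(aid)
    return list(groups.values())


entities = {
    "art1": {"City A", "Group X", "Day 1"},
    "art2": {"City A", "Group X", "Day 1", "police"},
    "art3": {"City B", "church", "Day 1"},
}
clusters = cluster_articles(entities)
```

Here `art1` and `art2` share three of four entities and are grouped as one candidate incident, while `art3` shares only the date and stays separate; a coder would still confirm each grouping.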

Screenshot of the GTD’s Data Management System (DMS), November, 2013.

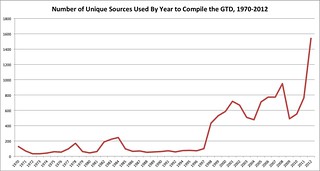

The changes in data collection methodology that have been made in recent months by the GTD team have resulted in a number of significant improvements to the overall quality of the database. Perhaps most importantly, the GTD team is now able to dig deeper than previously anticipated in order to find incidents for inclusion in the database. To illustrate this point, consider that, prior to 2012, identifying a year’s worth of terrorist incidents for inclusion in the GTD typically involved the use of approximately 300 unique news sources. By comparison, the 2012 update is based on a pool of over 1,500 unique news sources. These sources range from well-known international news agencies to English translations of local papers written in numerous foreign languages.4

In addition to improving the comprehensiveness of the collection, this large and eclectic set of sources is also helping to ensure that the information being recorded in the GTD is as up-to-date and accurate as possible. This goes for legacy cases as well.5 While in years past the GTD team has updated legacy data through supplemental collection projects, these efforts have been somewhat sporadic and contingent on the staff finding the time to break away from the ongoing collection to research historical incidents. The large and diverse pool of sources that the GTD team now has access to may make it possible to update legacy cases more regularly as new information about the attacks becomes available.

Unique Sources Used By Year to Compile the GTD, 1970-2012

These methodological changes promise certain future benefits as well. Most importantly for end users, it will now be possible to release annual updates to the database closer to real time. Recent updates to the GTD have been made approximately one year after the final recorded date in the data; for example, the December 2013 update to the GTD will include incidents through December 2012. Given consistent and sufficient funding, and with the methodological changes that have been made, the GTD team believes that it can now cut that lag time in half and release annual updates approximately six months after a year’s end.

While the benefits associated with these methodological changes are truly remarkable, it is important to recognize that they have brought with them certain challenges for ensuring data consistency. When dealing with a data collection that spans several decades, as does the GTD, it is important to be aware that changes in technology and communications, such as those brought on by the advent of the Internet and the rise of a globalized media, can have a notable impact on observable year-to-year trends in the data. Changes in data collection methodology can have similar effects.

Shortly after implementing these methodological changes, the GTD team noticed a sizable increase in the frequency of terrorist attacks over the previous year, and this trend continued through to the end of the 2012 data collection effort. The GTD team immediately began to wonder how much of this increase was driven by real world developments and how much of it was a product of the methodological advancements that had been made in previous months. While there is no simple answer to this question, what is certain is that by the start of the 2012 collection effort, the staff working on the GTD had become better than ever at identifying terrorist attacks, regardless of where they happened to occur.

It was also evident at this time that notable international developments were having a significant impact on the frequency and lethality of terrorist attacks in numerous parts of the world. Consider Nigeria, for example. 2012 was a watershed year for the country, which found itself unprepared to deal with the rapid emergence of Boko Haram as one of the world’s most active and deadly terrorist organizations. It is not surprising to find that the number of terrorist attacks in Nigeria in 2012 was nearly three and a half times the number in 2011, when Boko Haram was still a nascent organization.6
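The year-over-year comparison for Nigeria can be checked directly against the counts given in footnote 6. The calculation below uses those figures only; since the footnote says Boko Haram was responsible for "more than 400" attacks in 2012, the value 400 is treated as a lower bound.

```python
# Nigeria attack counts from footnote 6.
attacks = {2011: 173, 2012: 597}
boko_haram = {2011: 124, 2012: 400}  # 2012 value is a lower bound ("more than 400")

growth = attacks[2012] / attacks[2011]
share = {year: boko_haram[year] / attacks[year] for year in attacks}

print(f"2012 attacks relative to 2011: {growth:.2f}x")
print(f"Boko Haram share of attacks: 2011 {share[2011]:.0%}, 2012 at least {share[2012]:.0%}")
```

This is a raw comparison of counts; as the discussion below notes, part of any 2011 to 2012 increase may reflect the changes in collection methodology rather than real-world developments alone.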

This story holds true for many parts of the world, and these trends are reflected in the data. In fact, the GTD shows that terrorist activity has been increasing around the world for several years, and that this trend began well before the GTD team implemented the methodological changes described in this discussion piece.

With that said, the GTD team believes that some portion of the observable increase in terrorist activity since 2011 is the result of new advancements in collection methodology. As a result of these improvements, a direct comparison between 2011 and 2012 data likely overstates the increase in total attacks and fatalities worldwide during this time period. Caution should be used when drawing conclusions about what this increase means for the state of international security or global counterterrorism efforts.

Striking an appropriate balance between methodological advancements and data consistency is not a new challenge for data collectors. Over the years, the Federal Bureau of Investigation (FBI) and state and local law enforcement agencies have made numerous improvements to the procedures and technologies that are used to compile the annual report, Crime in the United States.

As a result of these changes, as well as the complexity of the factors that drive patterns of criminal behavior, the FBI cautions users of the report against making simplistic comparisons or drawing general conclusions without first considering the impact that collection methodology and issue complexity can have on observable trends in the data.7

With the information that is detailed in this discussion piece, the GTD team hopes that end-users of the database will employ similar caution when using the GTD to make sweeping claims about patterns of worldwide terrorist activity.

The challenges to data consistency notwithstanding, the GTD team is excited about the recent changes that have been made to its collection and coding practices, and we believe that users of the database stand to benefit tremendously from them as the database becomes more comprehensive and accurate, and updates to the collection become more timely.

The GTD staff also hopes that through its transparency and commitment to open dialogue, the community of researchers tasked with collecting large amounts of data for diverse end-users will begin to work together to advance collection methodology and technologies, while at the same time being cognizant of, and honest about, the challenges for data consistency that arise whenever such improvements are made. Hopefully this discussion piece can serve as the start of that dialogue.8

Footnotes

1 Generous funding from the Office of University Programs at the Department of Homeland Security Science and Technology Directorate and from the United States Department of State was also instrumental in making this release and its additions possible.

2 This threshold was determined after extensive manual review for false positives and false negatives.

3 For more on GDELT and the promises and pitfalls of automated coding, see Peter Henne and Jonathan Kennedy, “Embrace ‘Big Data,’ but don't ignore the human element in data coding,” START Newsletter (October, 2013), found at: http://www.start.umd.edu/news/discussion-point-embrace-big-data-dont-ignore-human-element-data-coding

4 Translated news articles are provided by the Office of the Director of National Intelligence’s Open Source Center.

5 Previous efforts to promote internal consistency and completeness in the database include applying definitional inclusion criteria across all phases of the previous data collection, as well as conducting numerous supplemental data collection efforts to address known deficits in the legacy data. Decisions to add or remove cases from the GTD are often a result of these efforts, and should not be taken as evidence that the GTD’s definition of terrorism has changed over time. The GTD has adhered to the same definition of terrorism since the database was founded.

6 Boko Haram was responsible for more than 400 of the 597 unique terrorist attacks that occurred in Nigeria in 2012. By comparison, in 2011, Boko Haram was responsible for 124 of the 173 incidents that occurred in the country. For more information about Boko Haram and its activities in Nigeria, see START’s recent background report on the group, FTO Designation: Boko Haram and Ansaru.

7 The Federal Bureau of Investigation. Crime in the United States, 2012. Found at: http://www.fbi.gov/about-us/cjis/ucr/crime-in-the-u.s/2012/crime-in-the-u.s.-2012/resource-pages/caution-against-ranking/cautionagainstranking

8 As a way of fostering this exchange, users of the GTD are encouraged to use the feedback portal found at: http://www.start.umd.edu/gtd/features/HowIUseGTD.aspx